One of the most pressing challenges in deploying deep learning at scale, especially for social media giant Meta, is making full use of hardware for inference as well as training.

Researchers have been chipping away at this problem via various compression and pruning techniques, the most recent of which is MetaPruning, which in 2019 represented the state of the art in pruning for maximum hardware efficiency. This has been in use at Meta (although oddly, the techniques were developed by a collection of universities in Asia and are not connected with Facebook/Meta efforts).

Despite hardware efficiency gains, there is still plenty of room for improvement, according to researchers from Meta and Rice University. The team is taking a closer look at the hardware efficiencies left on the table using more traditional compression techniques for deep learning training tasks, all without sacrificing accuracy.

There is a “dilemma between the trends of efficient DNN design and modern computing platform advances. While modern computing platforms (GPUs and TPUs) have consistently advanced to favor a higher degree of parallel computing, existing efficient DNN models often adopt lightweight operations that suffer from low hardware utilization and thus inferior achievable hardware efficiency,” the team explains.

More specifically, the compute patterns end up irregular, which is especially difficult for on-device processors to handle. This is because of “their reduced data reuse opportunities [which] limit existing efficient DNNs to unleash their theoretical potential.”

In short, the goal was to build more hardware-centric DNNs that can make better use of parallelism.

“How do we design efficient DNNs that can simultaneously enjoy both the powerful expressiveness of state-of-the art efficient DNN structures and boosted parallel computing capability of modern computing platforms?”

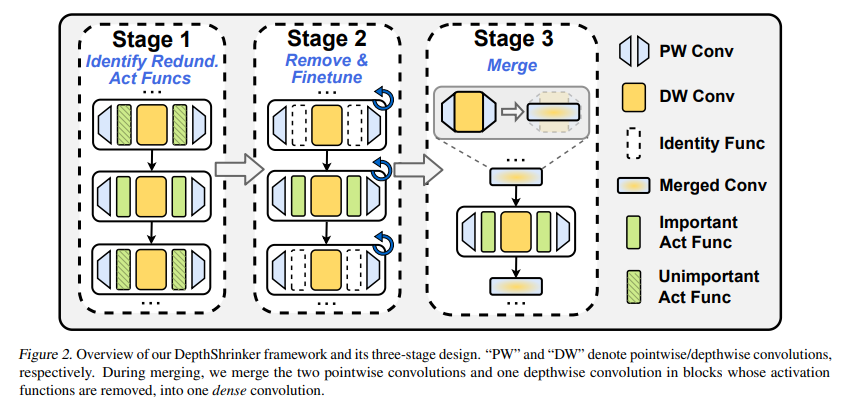

The outcome is “DepthShrinker,” a framework for building hardware-aware, super-compact neural networks by transforming irregular computation patterns into tighter, denser networks with higher throughput and accuracy. The team says their compression techniques allow “3.06% higher accuracy and 1.53X throughput on [Nvidia] Tesla V100 over state-of-the-art channel-wise pruning method, MetaPruning.”

Instead of the nice, simpler convolutional layers of days gone by, DepthShrinker takes all the irregular computation that is now the norm and merges “consecutive compact layers, between which the activation functions are learned to be unimportant for inference, into one single dense layer. DepthShrinker’s derived DNNs can largely leverage the high degree of parallelism in modern computing platforms and thus boost hardware efficiency while maintaining the original models’ accuracy.”
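To see why dropping an activation function lets two layers collapse into one, consider two linear layers with nothing nonlinear between them: W2(W1·x + b1) + b2 equals (W2·W1)·x + (W2·b1 + b2), so a single layer can reproduce the pair exactly. The sketch below illustrates that identity in plain Python. It is not the team's code: the helper names (such as `merge_linear`) are hypothetical, and it uses small fully connected layers as a stand-in for the compact convolutional layers DepthShrinker actually targets.

```python
# Illustrative sketch only: merging two linear layers that have no
# activation between them. All function names here are hypothetical.

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def linear(W, b, x):
    """Apply one linear layer: W x + b."""
    return [y + bi for y, bi in zip(matvec(W, x), b)]

def merge_linear(W1, b1, W2, b2):
    """Return (W, b) such that W x + b == W2 (W1 x + b1) + b2."""
    W = matmul(W2, W1)
    b = [y + bi for y, bi in zip(matvec(W2, b1), b2)]
    return W, b

# Two consecutive layers: 2 inputs -> 3 hidden -> 2 outputs.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
b1 = [0.5, 0.0, -1.0]
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.0]]
b2 = [0.0, 1.0]

x = [2.0, -3.0]

# Original path: two separate layers, applied back to back.
two_step = linear(W2, b2, linear(W1, b1, x))

# Merged path: one layer with precomputed weights and bias.
W, b = merge_linear(W1, b1, W2, b2)
one_step = linear(W, b, x)

print(two_step, one_step)  # both are [0.5, 7.5]
```

The identity only holds once the activation between the layers is gone, which is why the method first has to learn which activations can be removed without hurting accuracy. The merged layer is a single, denser operation, which is the kind of workload that highly parallel hardware tends to handle well.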

Because the work is meant to play out on servers as well as inferencing devices, the team tested the method on an Nvidia Tesla V100 GPU for the server side and, for the desktop and edge sides, on an Nvidia RTX 2080Ti and a Jetson TX2.

While the bulk of the benchmarking the team did was focused on inferencing, the same concept can be applied to training. “The vanilla design of our DepthShrinker described above leverages the insight that unimportant activation functions can be properly removed after training without hurting the inference accuracy. Excitingly, this insight can also be leveraged to improve DNN training. Specifically, we propose to train a given DNN via an Expand-then-Shrink strategy, and term it as DepthShrinker+.”

The team also extended its evaluation of DepthShrinker to edge CPUs, including those in the Google Pixel 3 and Raspberry Pi 4, using a batch size of 1, and reports lower latency than standard approaches (PyTorch to ONNX, then converted to TFLite).

“Extensive experiments validate our DepthShrinker wins both the high accuracy of channel-wise pruning and the decent efficiency of layer-wise pruning, opening up a cost-effective dimension for DNN compression.” Full benchmarks and more data are available in the team's paper.
